Reflections from the e-Assessment Association (eAA) Conference: Insights, Connections, and New Perspectives

The 2023 edition of the e-Assessment Association Conference, which took place in London on June 6-7, offered valuable insights, connections, and fresh perspectives to industry professionals, encouraging collaboration and knowledge exchange. It covered a broad range of topics, including language bias in AI and inclusive assessment practices, exam security enhancements, innovative test design, guidelines for validity and fairness in technology-based assessment, skills measurement in the age of AI, personalized exam timing based on readiness, VR and AI integration in medical assessments, automation in item authoring, and potential threats and legal implications of AI in assessment.

cApStAn Founding Partner Steve Dept, who attended the e-Assessment conference as an invited speaker, shares: “I met people I had been wanting to meet and who were liberal with their time and their insights. I met people I didn’t expect to meet, and the conversations opened new perspectives. I learned a lot and shared a lot and got a better sense of how my peers in the assessment world are reconciling (or striving to reconcile) DEI, AI, LLMs, NMT, AIG, ML. I was invited to speak (thank you Tim Burnett 🚀!) and my talk had a surprising resonance. Next year I’ll be there for sure. Thank you to the organizers and to all the pioneers in this industry!”

What follows is a selective summary of the conference.

Day 1

Meaning shifts and perception shifts

The highlight of the meeting, for us at cApStAn, was Steve Dept’s very well received and well attended talk on Day 1 on “Meaning Shifts, Perception Shifts: Language as a component of inclusiveness”. He set the scene by explaining how bias can arise in the translation of tests and assessments. Meaning shifts are mostly language-driven: a 1:1 semantic mapping in translation is elusive at best, he explained. Perception shifts are culture-driven: the same information carries a different load in different cultures (this can also occur in monolingual settings). Steve presented cApStAn’s “upstream” linguistic quality assurance process, which mitigates potential bias by identifying meaning and perception shifts before the instruments are finalised, and neutralising them before the assessment goes into production (read more about cApStAn’s linguistic quality assurance processes at this link).

Steve also explained why AI should be used with discernment. He shared interesting examples of gender bias in Generative AI, which is a product of the data on which the language model is trained (e.g., the consistent assumption that nurses are female and doctors are male).

Steve Dept’s presentation

Exam security

Exam security is a critical concern in the field of tests and assessments. In this session, titled “Prevent, deter, detect, react”, Ben Hunter, Vice President of Sales at Caveon (presenting remotely), and Paul Muir, Vice President of the e-Assessment Association and Chief Strategy and Partnership Officer at Surpass Assessment, addressed the key aspects of preventing, deterring, detecting, and reacting to security threats throughout the exam process:

- Pre-exam: apply the latest approaches to exam security, such as ID/person verification, web patrol, and test form design techniques, including randomization of question-and-answer order and Linear On-The-Fly testing.

- During the exam: learn about the people, processes, and technological measures that can be implemented to ensure a secure exam environment.

- Post-exam: explore the significance of data forensics in safeguarding against exam fraud, and gain insights into the latest tools available in the industry.

Traditional test design

Marcel Britsch and Anthony Nicols from the British Council recapped developments over the past year and acknowledged that the traditional ‘test-design first’ approach just doesn’t work for what they want to do and share. They presented the British Council’s new team-driven and collaborative approach, involving experts from various disciplines. Through continuous cross-functional collaboration and co-creation, including assessment, product, operations, marketing, commercial, and compliance, this approach aims to foster an environment where the academic construct sets the goal. This dynamic interplay allows technology and theory to complement each other, driving innovation and enabling the emergence of new possibilities.

Team-driven approach presented by the British Council

ITC-ATP Guidelines for Technology-based Assessments

During the afternoon session of Day 1, the International Test Commission (ITC) and Association of Test Publishers (ATP) guidelines on technology-based assessment were presented by John Kleeman, EVP of Learnosity and Founder of Questionmark, and John Weiner, Chief Science Officer at Lifelong Learner Holdings, parent company of PSI and Talogy. The speakers, who were both directly involved in their development, explained that the guidelines are the result of a multi-year collaboration with over 100 expert authors and reviewers. They provide guidance for the design, delivery, scoring and use of digital assessments, while ensuring validity, fairness, accessibility, security and privacy. We are proud that our own Steve Dept was the author of the guidelines’ chapter on translation and adaptation.

These guidelines are a joint initiative of the ITC and the ATP. Steve Dept has represented cApStAn at all ITC Conferences since 2006, and it was a great honour for him to be co-opted as a member of the ITC Council (see our article at this link).

John Kleeman presenting the ITC/ATP guidelines

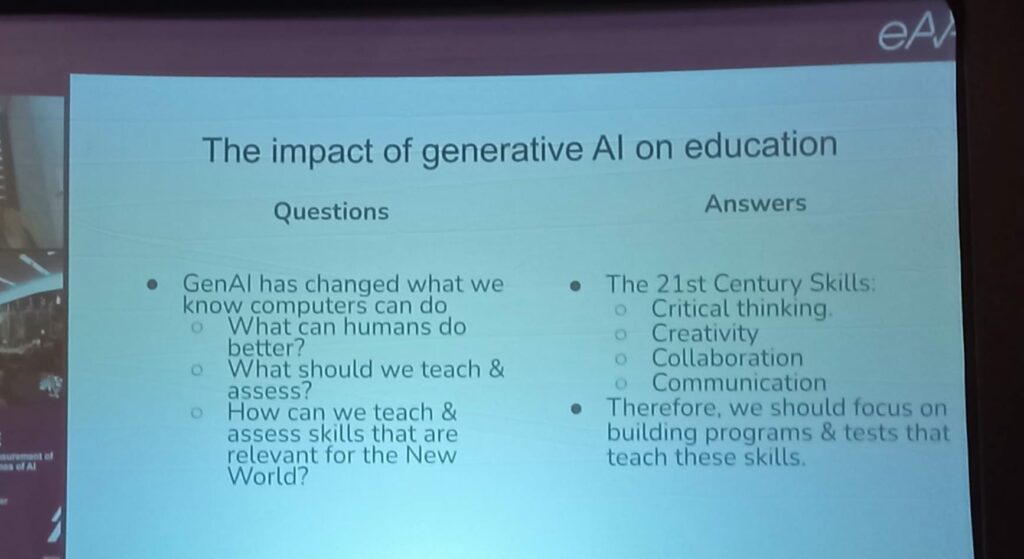

Measuring skills in the era of AI

In one of the Day 1 closing sessions, Alina von Davier, Chief of Assessment at Duolingo, attending remotely, addressed the question of what we should focus on in future teaching, learning, and assessing at a time when machines can write documents and poems, compute, and produce art. What skills will be necessary in this “new world”, and how can we measure them? In her presentation Alina described the use of generative AI and computational psychometrics for assessment development within a theoretical ecosystem. She illustrated these concepts with examples from a critical thinking assessment and an online course that indirectly measures several 21st century skills.

Alina von Davier’s presentation

Day 2

“Stage not age”: timing of exams

The morning keynote was remarkable, says Steve. David Gallagher, CEO of the awarding body NCFE (Northern Council for Further Education), drew on his own personal experience in mainstream education to reflect on how biased the system is towards people like him: he did well because he was good at memorizing and regurgitating. This way he learnt to be totally disengaged, knowing he would get away with it at the time of the assessment. He advocates “stage, not age”: young people should take exams when they are ready, not at a given point in time. David Gallagher drew the audience in without PowerPoint, without visuals, just using storytelling (with a choppy Northwestern eloquence!), comments Steve.

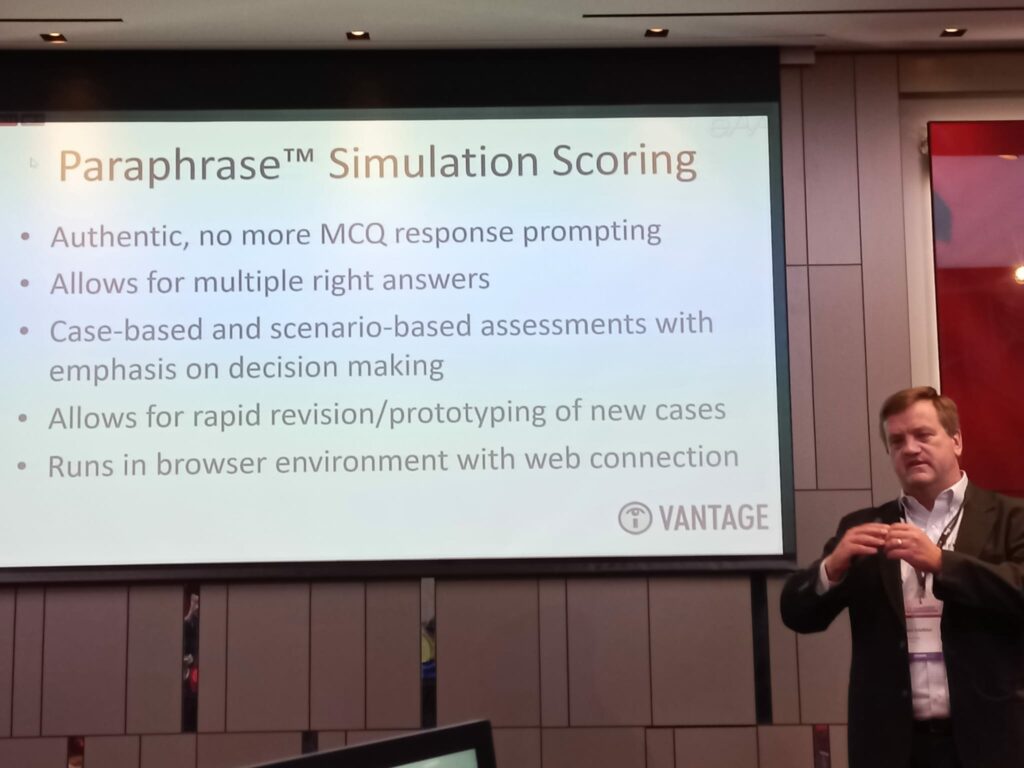

Merging VR and AI in medical assessments

Paul Edelblut, Vice President at Vantage Laboratories, delivered another excellent, lively presentation with a fascinating demo of the paraphrase matching power of “Adaptera” during a session with Jeremy Carter from SentiraXR. The session discussed the use of two key emergent technologies in medical assessments: Virtual Reality (VR) and Artificial Intelligence (AI). The purpose of the demo was to showcase how VR and Augmented Reality (AR), in combination with AI, make it possible to blend the authenticity of patient encounters and the reliability of scoring.

Paul Edelblut from Vantage Labs presents “Adaptera”

Automated assessment item authoring

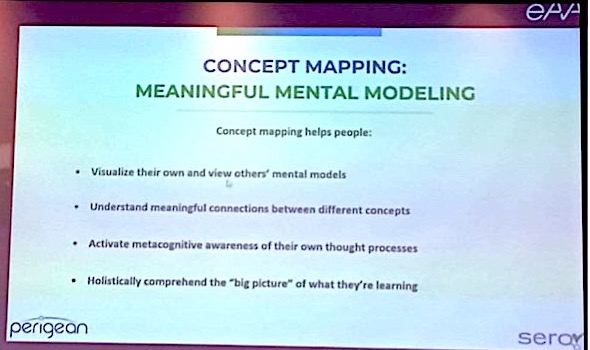

This topic was addressed by Brian Moon, CTO of Perigean Technologies and President of Sero! Learning Assessments. With recent advancements in large language models, Moon says there is great promise for achieving full automation. In addition, concept mapping-based assessments present an opportunity to disrupt the traditional conception of assessments.

Brian Moon on automated assessment item authoring

Potential threats and legal implications of AI

Concerns about how the use of AI in assessment will evolve were expressed during the Day 2 closing session titled “AI in assessment – is the future out of control?” What are the potential threats and legal implications of AI use in educational and professional settings? As technology continues to evolve rapidly, how can the industry stay up to date and also ensure that assessments are accurate, fair and secure? It was an excellent panel discussion with Marc J. Weinstein – PLLC, David Yunger – Vaital, Isabelle Gonthier – PSI Services, and Gavin Busuttil-Reynaud – AlphaPlus, moderated by Sue Martin – TÜV Rheinland, e-Assessment Association.