Deepfake lip syncing technology could help translate film and TV without losing an actor’s original performance

by Pisana Ferrari – cApStAn Ambassador to the Global Village

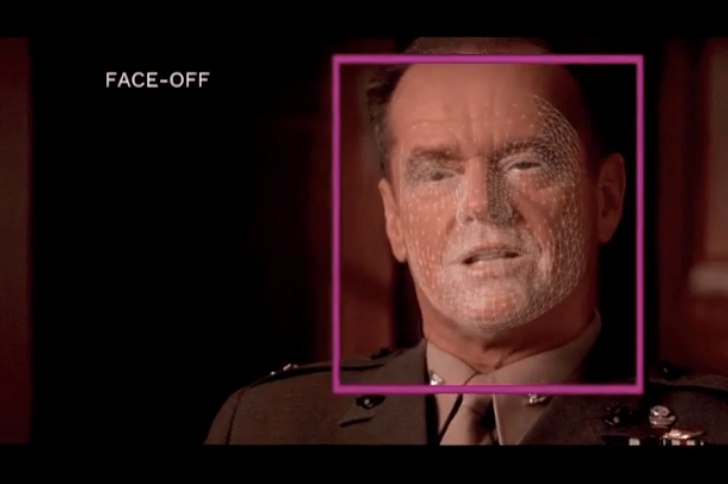

Dubbing or subtitles, which is better? § This age-old debate came back to the forefront in 2020 with the Oscar win for the South Korean film “Parasite”. Accepting a Golden Globe earlier that awards season, director Bong Joon-ho made a strong case for subtitles. “Once you overcome the one-inch-tall barrier of subtitles, you will be introduced to so many more amazing films”, he said. While subtitles allow viewers to fully experience an actor’s performance, they can be distracting, and it can be difficult to read and follow the action at the same time. Dubbing, on the other hand, allows viewers to concentrate better on the movie but can lead to a loss of nuance (e.g. local accents), and is very dependent on the acting quality of the voice actors. Also, lip syncing (matching a speaker’s lip movements with pre-recorded audio) is difficult, and mistimed mouth movements are common. Deepfake technology may be a game changer in this respect. We normally think of deepfakes as manipulating the entire image of a person or a scene, but the technology used by a new start-up called Flawless AI, co-founded by film director Scott Mann, focuses on just a single element: the mouth. Flawless’ machine learning models study how actors move their mouths and then change the movements to sync seamlessly with the replaced or dubbed words in different languages. The result could be that Tom Hanks looks like he can speak Japanese or Jack Nicholson looks like he can speak French. The promo video developed by the company features a French dub of the classic 1992 legal drama A Few Good Men, starring Jack Nicholson and Tom Cruise, and it is very impressive. But, personally, I feel that no matter how flawless dubbing may become in future, nothing can replace the experience of seeing actors express themselves in their own language, with their own particular accent, tone and nuances of voice, and with their original facial expressions and lip movements.
So my preference would go to subtitles, any day!

See Flawless’ promo video at this link

Future prospects

Mann says he was inspired to come up with the tool when he saw how dubbing affected the narrative cohesion of his 2015 film “Heist”, starring Robert De Niro. He says that the tool will give creators back control and enable stories to be told exactly as they were intended. “New opportunities, untapped markets, and exciting revenue streams are all ready to be explored”. Deepfake dubs are cheap, he says, and quick to create. They could be of particular interest for remakes. Flawless co-founder Nick Lynes gives the example of the Oscar-winning 2020 Danish film Another Round, which stars Mads Mikkelsen. After its success at home and on the international award circuit, the film is set to be remade for English-language audiences with Leonardo DiCaprio in the main role. What of the future? Currently most media streaming companies offer both subtitles and dubbing, which allows users to choose according to their preferences. Netflix, in particular, offers both subtitled and dubbed content, and the audiences for each have been expanding. Flawless says it already has a first contract with a client it can’t name, but there is no timeline yet for when we might see the technology used in a film. That will be the real test.

Footnotes

- Subtitles translate a film’s dialogue into a written text superimposed on the screen, allowing viewers to read along while following the actors as they speak in their native language. Dubbing consists of translating and lip-syncing the original audiovisual text, where the original language voice track is replaced by the target language voice track.

Sources

“This AI tool can accurately recreate lip syncing so dubbed movies won’t look bad anymore”, Rollo Ross, IOL, May 19, 2021

“AI Tool Could Change Language in Films”, John Russell, VOA news, May 23, 2021

“Deepfake dubs could help translate film and TV without losing an actor’s original performance”, James Vincent, The Verge, May 18, 2021