Translatability of test items and challenges in scoring and equating adapted tests

A Q&A session between Dr Avi Allalouf and our CEO, Steve Dept

Dr Avi Allalouf from NITE (the National Institute for Testing and Evaluation) in conversation with our CEO Steve Dept: he tells us why the Israeli Psychometric Entrance Test is translated into six different languages; he shares his views on the translatability of test items assessing verbal and quantitative reasoning; and he explains the challenges involved in scoring and equating adapted tests.

1. Steve: Why is the Israeli Psychometric Entrance Test (PET) translated into several languages?

Avi: In general, admissions to higher education in Israel are based on two criteria, usually equally weighted: matriculation exams and the PET. The PET comprises three domains: Verbal Reasoning, Quantitative Reasoning, and English as a foreign language. It is developed in Hebrew and translated into and adapted for Arabic, French, English, Russian, Italian and Portuguese. The Arabic test form serves the large Arab minority (about 20 percent of the population in Israel), and the other adapted test forms serve mainly the non-Hebrew speaking immigrant population. The rationale for the decision to translate and adapt the PET is that admissions should be based on merit.

Generally speaking, unless the aim is to test foreign language proficiency, a test should be administered in the examinee’s native language, since examinees can best express their ability in their mother tongue. Examinees who take a test in a language other than their native language sometimes cannot fully comprehend instructions, tasks and questions. They also may not know some of the basic concepts and idioms that are familiar to native speakers of the language. Consequently, they are not able to adequately demonstrate their knowledge and their quantitative & writing skills. In cases where an examinee is not fully proficient in the language in which the test is administered, the reliability and validity of the measurement instrument could be compromised, and if a score is less reliable and the inferences drawn from it are not valid, then the test is less fair.

2. Steve: Now that more is known about potential causes of differential item functioning (DIF), can we do more to determine whether a test item is translatable?

Avi: Indeed, we now know more about the potential causes of DIF in translated items. During the past three decades, numerous studies have been conducted to identify the causes of DIF in translated items, and key questions have been raised, such as:

What are the sources of DIF? How can we identify them? Is DIF associated with specific item types? How is DIF connected to item content? How can we reduce the occurrence of DIF?

However, using the accumulated knowledge to reduce DIF in test translation is not an easy task, even for experienced translators who have been informed of the conclusions of studies regarding the potential causes of DIF. Therefore, even though the knowledge gathered may help in determining whether a test item is translatable, it unfortunately can do so only to a limited degree. To establish whether an item functions differentially across languages, what is needed is sufficient data on the performance of that item in a test. Verbal Reasoning questions, which often involve connotations that differ across languages and cultures, might shift in meaning as a result of translation, while Quantitative Reasoning questions are usually not substantially affected or changed by translation.
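To illustrate why response data is needed, here is a minimal sketch of one widely used DIF statistic, the Mantel-Haenszel common odds ratio, computed for a single item. The interview does not say which DIF procedure NITE applies, so the choice of statistic, the group labels `"source"` and `"target"`, and the function name are assumptions made for illustration only. Examinees are matched on a score level (the stratum) before the two language groups are compared.

```python
import math
from collections import defaultdict


def mantel_haenszel_dif(records):
    """Return (alpha_MH, MH D-DIF) for one item, or None if undefined.

    records: iterable of (group, stratum, correct) tuples, where
      group   is "source" or "target" (hypothetical labels for the two
              language versions),
      stratum is the matching score level (e.g. a total-score band),
      correct is True/False for the examinee's answer to this item.
    """
    # One 2x2 table per stratum:
    # [source right, source wrong, target right, target wrong]
    tables = defaultdict(lambda: [0, 0, 0, 0])
    for group, stratum, correct in records:
        if group not in ("source", "target"):
            raise ValueError(f"unknown group: {group!r}")
        offset = 0 if group == "source" else 2
        tables[stratum][offset + (0 if correct else 1)] += 1

    num = den = 0.0
    for a, b, c, d in tables.values():
        n = a + b + c + d  # never zero: a table exists only once it has a record
        num += a * d / n
        den += b * c / n

    if num == 0 or den == 0:
        return None  # too little data to estimate the odds ratio

    alpha = num / den
    # Conventional rescaling of the odds ratio to the delta metric.
    return alpha, -2.35 * math.log(alpha)
```

An odds ratio near 1 (a delta value near 0) suggests that matched examinees in both language groups perform similarly on the item. A clearly negative delta suggests the item is harder in the target version. With only a handful of examinees per stratum the estimate is unstable, which is the practical reason sufficient data is required.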

3. Steve: What can you say about the translatability of verbal reasoning test item types?

Avi: Item types differ in their translatability – that is, in their capacity to retain psychometric characteristics across translated forms. Test items that contain idioms and expressions often cannot be translated at all. Cultural relevance may affect any item, but there are other factors that affect specific item types. The amount of text an item contains is a significant factor: items with less text tend to have more translation DIF (every single word may have an adverse effect on the translation), while items with more text are more likely to retain their meaning (the influence of single words is small in the larger context).

This is why Reading Comprehension items have high translatability. For the same reason, logic questions with a large amount of text have high translatability too. Verbal Analogies, which are very short (ten words for a four-alternative multiple-choice item), have low translatability – since any differences in word difficulty cause DIF. In another popular item type – Sentence Completions – there is usually a need to change the sentence structure, which affects the psychometric characteristics of the translated item.

4. Steve: What can you say about the translatability of mathematics test item types?

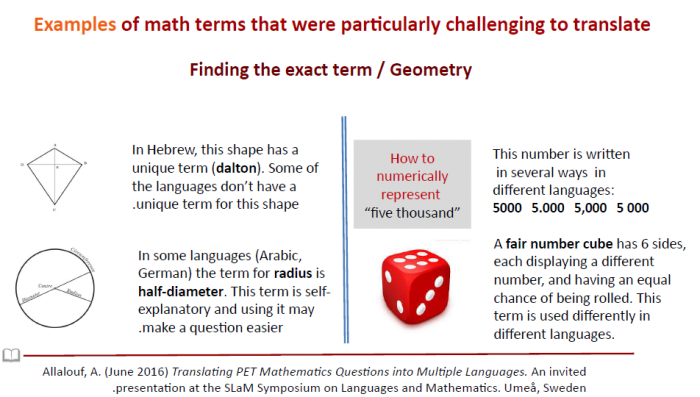

Avi: Translating Quantitative Reasoning – or math – items is less complicated and requires less time than translating Verbal Reasoning items. In math questions it is essential to translate mathematical terms precisely; however, in some cases an exact translation does not exist. In these cases, there would seem to be two options: (1) to “do the best one can” and find the closest term in the target language or (2) to “give up” – if possible – and not translate the item. Verbal reasoning ability affects performance on math items with high verbal loading. Translators who have accumulated substantial experience are able to deal with the typical problems in translating math items. The following are examples of challenges involved in translating math items.

5. Steve: Once the test is translated and has been administered in different languages, you need to score the responses and report the results. What are the challenges in scoring and equating adapted tests?

Avi: When a test form is adapted from a source language to a target language, the two forms are usually not psychometrically equivalent. If the DIF analysis reveals that the psychometric characteristics of certain items have been altered by the translation, steps must be taken, but exactly what steps will depend on the assessment’s linking design. If common items are used for the linking, the DIF items should not be used for linking. One should be careful with any steps taken since deleting too many DIF items can result in an anchor that is too short for equating purposes. If there is no linking, the non-DIF items should be used for scoring both test versions (source and target). This will make the source and target versions more similar psychometrically and their scores more comparable.

Before the DIF items are omitted, they should be examined carefully for bias by experts in the subject area, since, in very rare cases, there may be real differences – rooted in culture, knowledge, or experience – in the way a certain language group performs on specific test items. In cases where these differences are regarded as relevant, the DIF items are not to be omitted from the scoring process.
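The decision logic described above can be sketched as follows. This is an illustration under stated assumptions, not NITE's procedure: the function name, the flagging threshold of 1.5 on the delta scale, the minimum anchor length, and the `keep_after_review` parameter (items that experts judged to reflect real, relevant group differences) are all hypothetical.

```python
def select_anchor(dif_by_item, threshold=1.5, min_anchor_len=20,
                  keep_after_review=()):
    """Split items into retained and dropped lists after DIF analysis.

    dif_by_item: dict mapping item id -> DIF statistic (delta scale assumed).
    threshold: absolute DIF value at or above which an item is flagged
               (assumed cut-off, not taken from the interview).
    min_anchor_len: assumed minimum number of items needed for linking.
    keep_after_review: flagged items that expert review decided to retain.

    Returns (retained, dropped, long_enough).
    """
    reviewed = set(keep_after_review)
    retained, dropped = [], []
    for item, dif in dif_by_item.items():
        if abs(dif) < threshold or item in reviewed:
            retained.append(item)
        else:
            dropped.append(item)
    # Dropping too many items can leave an anchor too short for equating.
    return retained, dropped, len(retained) >= min_anchor_len
```

When the third return value is `False`, the anchor has become too short, and the set of dropped items or the linking design itself would need to be reconsidered before equating.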

About Dr Avi Allalouf

Dr Allalouf is Deputy Director as well as the Director of Scoring & Equating at NITE. He is responsible for external coordination, operation of assessment centers, and the NITE research fund. In addition, he oversees the process of scoring, equating and score reporting of tests administered by NITE – including the Psychometric Entrance Test (PET) – to institutions of higher education and to examinees. Dr Allalouf has served as the president of the Israeli Psychometric Association and as co-editor of the International Journal of Testing (IJT). He is currently head of the Certification Studies in Psychometrics Program at NITE. His areas of research include: scoring & equating, quality control, differential item functioning (DIF), test adaptation to multiple languages, and the impact of testing on society.

Dr Avi Allalouf on LinkedIn and ResearchGate

NITE (the National Institute for Testing and Evaluation) was established by the Association of University Heads in Israel in order to assist in the admissions and placement process for applicants to Israel’s institutions of higher education. In the years since its establishment, NITE has won recognition as a leading institution in the development of testing and evaluation tools – especially admissions, placement, and accreditation tests for institutions of higher education. Other objectives and activities include: (1) provision of consultation, assessment, admissions, selection and evaluation services to institutions of higher education and other organizations, with the aim of enhancing their knowledge and functioning, and (2) research on topics related to admissions and selection systems in such institutions and organizations.