Multilingual tests: when do you translate, when do you adapt? And how?

by Pisana Ferrari – cApStAn Ambassador to the Global Village

What reasonable investments should you make to move beyond the “just translate it” attitude? When do you translate, when do you adapt? What workflows work best for the translation of assessments? These are just some of the issues addressed at a well-attended webinar organised jointly by John Kleeman and Steve Dept, CEOs and Founders of Questionmark and cApStAn respectively, both leading experts in the assessment field.

Comparability

When tests are administered in different languages and cultures they must measure the same thing. Straightforward translation doesn’t always work well. Meaning shifts (which are language-driven) and perception shifts (which are culture-driven) may affect the psychometric properties of items and compromise comparability across languages. Tests earmarked for translation often contain idioms and colloquialisms that can hardly be rendered in target languages. This is where “adaptation” (or “localisation”) comes in, i.e. whenever direct translation could put respondents from the target group at a disadvantage or at an advantage. “Fairness” is key to comparability.

Planning

The approach to planning a test translation will differ depending on whether one is designing it from scratch, or whether one is working on an existing test. In the first case specialists should be involved from the earliest stages to identify whether the constructs could have issues in the target languages/cultures and whether proposed item formats need adjusting. Ideally, the test should be designed with this in mind. When there is an existing test it is important to ensure, before proceeding to translation, that the constructs apply in the language/culture. In both cases a “translatability assessment” can help identify potential issues. Time spent optimising the source version “upstream” drives quality of translated texts and saves time “downstream”.

Process

Some examples of useful steps to include in the assessment localisation process are:

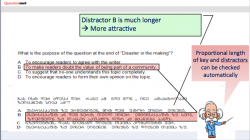

- Project specific rules: rules that can be linked to attributes in the source, e.g. number of characters, text styles, length of response choices (see photo below), gender adaptations;

- Glossaries: terms that need to be translated consistently, fictitious names, terms or expressions that involve some research, acronyms, names of months and years;

- Translation and adaptation notes: translation traps and psychometric traps, specifying which adaptations are required, desirable or ruled out.
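Some of the rules and glossary entries listed above lend themselves to automated checks. The following is a minimal sketch in Python, not a description of any tool shown in the webinar: the `Segment` class, the `max_chars` attribute and the sample glossary entry are all hypothetical, and the glossary check uses plain substring matching, which would miss inflected forms in many languages.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Segment:
    """One source/target pair; all field names are illustrative assumptions."""
    segment_id: str
    source: str
    target: str
    max_chars: Optional[int] = None  # hypothetical project-specific length rule


def check_segment(segment, glossary):
    """Return a list of warnings for one translated segment."""
    warnings = []

    # Project-specific rule: the translation must fit the character limit.
    if segment.max_chars is not None and len(segment.target) > segment.max_chars:
        warnings.append(
            f"{segment.segment_id}: target has {len(segment.target)} characters, "
            f"limit is {segment.max_chars}"
        )

    # Glossary rule: if a glossary term occurs in the source, the agreed
    # translation should occur in the target (naive substring comparison).
    for source_term, target_term in glossary.items():
        in_source = source_term.lower() in segment.source.lower()
        in_target = target_term.lower() in segment.target.lower()
        if in_source and not in_target:
            warnings.append(
                f"{segment.segment_id}: expected glossary term '{target_term}' "
                f"for '{source_term}'"
            )
    return warnings


# Hypothetical example data.
glossary = {"reading literacy": "compréhension de l'écrit"}
segment = Segment(
    segment_id="item1.stem",
    source="This test measures reading literacy.",
    target="Ce test mesure la lecture.",
    max_chars=20,
)
warnings = check_segment(segment, glossary)
for warning in warnings:
    print(warning)
```

Checks of this kind can only flag candidates for a linguist to review; they do not replace the translation and adaptation notes themselves.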

Translation workflow

What about the optimal translation workflow? According to the speakers, it is best practice to export the master out of the assessment system in a standard interchange format (e.g. XLIFF), allow translators to work in their preferred translation technology environment, and then seamlessly import the translated tests back into the platform.
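To make the export and import round trip more concrete, here is a rough sketch of reading translation units from an XLIFF 1.2 file with Python's standard library. The sample document, its segment ids and the `read_units` helper are invented for illustration; real exports from an assessment platform will typically contain inline markup and metadata that this sketch ignores.

```python
import xml.etree.ElementTree as ET

# Namespace of XLIFF 1.2 documents.
XLIFF_NS = {"x": "urn:oasis:names:tc:xliff:document:1.2"}

# Hypothetical export of two segments; the second is not yet translated.
SAMPLE = """<xliff version="1.2" xmlns="urn:oasis:names:tc:xliff:document:1.2">
  <file original="test.xml" source-language="en" target-language="fr"
        datatype="plaintext">
    <body>
      <trans-unit id="item1.stem">
        <source>Which city is the capital of France?</source>
        <target>Quelle ville est la capitale de la France ?</target>
      </trans-unit>
      <trans-unit id="item1.optionA">
        <source>Paris</source>
      </trans-unit>
    </body>
  </file>
</xliff>"""


def read_units(xliff_text):
    """Map each trans-unit id to a (source, target) pair; target may be None."""
    root = ET.fromstring(xliff_text)
    units = {}
    for unit in root.iterfind(".//x:trans-unit", XLIFF_NS):
        source = unit.find("x:source", XLIFF_NS)
        target = unit.find("x:target", XLIFF_NS)
        source_text = source.text or "" if source is not None else ""
        target_text = target.text if target is not None else None
        units[unit.get("id")] = (source_text, target_text)
    return units


units = read_units(SAMPLE)
# Segments that still need a translation before re-import.
untranslated = [uid for uid, (_, target) in units.items() if not target]
print(untranslated)
```

Keeping the segment ids stable between export and import is what allows the translated text to be matched back to the right item in the platform.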

Translation verification

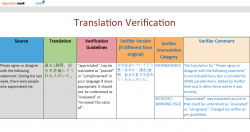

Once the test is translated, an independent quality check of the translation is desirable in order to maximise comparability. Translation verification should compare source and target versions segment by segment and check compliance with translation and adaptation notes, with a focus on semantic equivalence. Ideally, a set of standardised intervention categories should be used to report issues detected by the verifiers, and all issues should be reported in a centralised monitoring tool, as shown in the illustration below.
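One possible way to picture a log built on standardised intervention categories is sketched below in Python. The category names, the `Intervention` fields and the sample entries are hypothetical and are not taken from the webinar or from any particular monitoring tool; the point is only that a closed set of categories makes issues countable and comparable across languages.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # Hypothetical category names, for illustration only.
    MISTRANSLATION = "mistranslation"
    OMISSION = "omission"
    NOTE_NOT_FOLLOWED = "translation/adaptation note not followed"


@dataclass(frozen=True)
class Intervention:
    """One issue reported by a verifier for one segment."""
    language: str
    segment_id: str
    category: Category
    comment: str


def summarise(log):
    """Count reported issues per category."""
    return Counter(entry.category for entry in log)


# Hypothetical entries in a centralised log.
log = [
    Intervention("fr", "item1.stem", Category.OMISSION, "Second clause missing"),
    Intervention("fr", "item2.optionB", Category.NOTE_NOT_FOLLOWED, "Name not adapted"),
    Intervention("de", "item1.stem", Category.OMISSION, "Adverb dropped"),
]
summary = summarise(log)
for category, count in summary.most_common():
    print(f"{category.value}: {count}")
```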

Key take-home messages:

- Translation should be embedded in test design

- Involve specialists (linguists and translation technologists) in the earliest stages of the test translation project

- Time spent optimising the source version and preparing the translation/adaptation process well will drive the quality of translated tests

- It is best practice to export the master out of the assessment system, allow translators to work in their preferred translation technology environment, and then seamlessly import the translated tests back into the platform

- Organise (at least some) independent quality checks of the translation